Couldn't load pickup availability

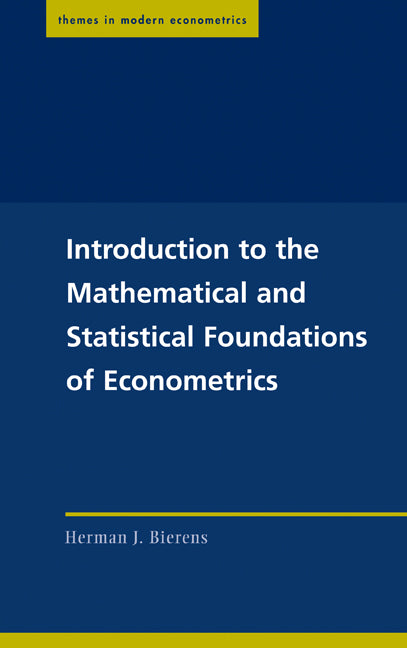

Introduction to the Mathematical and Statistical Foundations of Econometrics

Herman J. Bierens (Author)

9780521834315, Cambridge University Press

Hardback, published 20 December 2004

344 pages, 19 b/w illus., 12 tables

22.9 x 15.2 x 2.4 cm, 0.68 kg

'The objective of this book is to use it as an introductory text for a Ph.D. level course in Econometrics. … Appendixes are self contained with review which are easy to learn and understand. As a whole, I consider this book as unique and self-contained and it will be a great resource for researchers in the area of Econometrics.' Zentralblatt MATH

This book is intended for use in a rigorous introductory PhD-level course in econometrics, or in a field course in econometric theory. It covers the measure-theoretical foundation of probability theory, the multivariate normal distribution with its application to classical linear regression analysis, various laws of large numbers, central limit theorems and related results for independent random variables as well as for stationary time series, with applications to asymptotic inference of M-estimators, and maximum likelihood theory. Some chapters have their own appendices containing the more advanced topics and/or difficult proofs. Moreover, there are three appendices with material that is supposed to be known. Appendix I contains a comprehensive review of linear algebra, including all the proofs. Appendix II reviews a variety of mathematical topics and concepts that are used throughout the main text, and Appendix III reviews complex analysis. Therefore, this book is uniquely self-contained.

Part I. Probability and Measure: 1. The Texas lotto

2. Quality control

3. Why do we need sigma-algebras of events?

4. Properties of algebras and sigma-algebras

5. Properties of probability measures

6. The uniform probability measures

7. Lebesgue measure and Lebesgue integral

8. Random variables and their distributions

9. Density functions

10. Conditional probability, Bayes's rule, and independence

11. Exercises: A. Common structure of the proofs of Theorems 6 and 10

B. Extension of an outer measure to a probability measure

Part II. Borel Measurability, Integration and Mathematical Expectations: 12. Introduction

13. Borel measurability

14. Integral of Borel measurable functions with respect to a probability measure

15. General measurability and integrals of random variables with respect to probability measures

16. Mathematical expectation

17. Some useful inequalities involving mathematical expectations

18. Expectations of products of independent random variables

19. Moment generating functions and characteristic functions

20. Exercises: A. Uniqueness of characteristic functions

Part III. Conditional Expectations: 21. Introduction

22. Properties of conditional expectations

23. Conditional probability measures and conditional independence

24. Conditioning on increasing sigma-algebras

25. Conditional expectations as the best forecast schemes

26. Exercises: A. Proof of Theorem 22

Part IV. Distributions and Transformations: 27. Discrete distributions

28. Transformations of discrete random vectors

29. Transformations of absolutely continuous random variables

30. Transformations of absolutely continuous random vectors

31. The normal distribution

32. Distributions related to the normal distribution

33. The uniform distribution and its relation to the standard normal distribution

34. The gamma distribution

35. Exercises: A. Tedious derivations

B. Proof of Theorem 29

Part V. The Multivariate Normal Distribution and its Application to Statistical Inference: 36. Expectation and variance of random vectors

37. The multivariate normal distribution

38. Conditional distributions of multivariate normal random variables

39. Independence of linear and quadratic transformations of multivariate normal random variables

40. Distribution of quadratic forms of multivariate normal random variables

41. Applications to statistical inference under normality

42. Applications to regression analysis

43. Exercises: A. Proof of Theorem 43

Part VI. Modes of Convergence: 44. Introduction

45. Convergence in probability and the weak law of large numbers

46. Almost sure convergence and the strong law of large numbers

47. The uniform law of large numbers and its applications

48. Convergence in distribution

49. Convergence of characteristic functions

50. The central limit theorem

51. Stochastic boundedness, tightness, and the O_p and o_p notations

52. Asymptotic normality of M-estimators

53. Hypotheses testing

54. Exercises: A. Proof of the uniform weak law of large numbers

B. Almost sure convergence and strong laws of large numbers

C. Convergence of characteristic functions and distributions

Part VII. Dependent Laws of Large Numbers and Central Limit Theorems: 55. Stationarity and the Wold decomposition

56. Weak laws of large numbers for stationary processes

57. Mixing conditions

58. Uniform weak laws of large numbers

59. Dependent central limit theorems

60. Exercises: A. Hilbert spaces

Part VIII. Maximum Likelihood Theory: 61. Introduction

62. Likelihood functions

63. Examples

64. Asymptotic properties of ML estimators

65. Testing parameter restrictions

66. Exercises

Subject Areas: Applied mathematics [PBW], Economic statistics [KCHS], Econometrics [KCH]