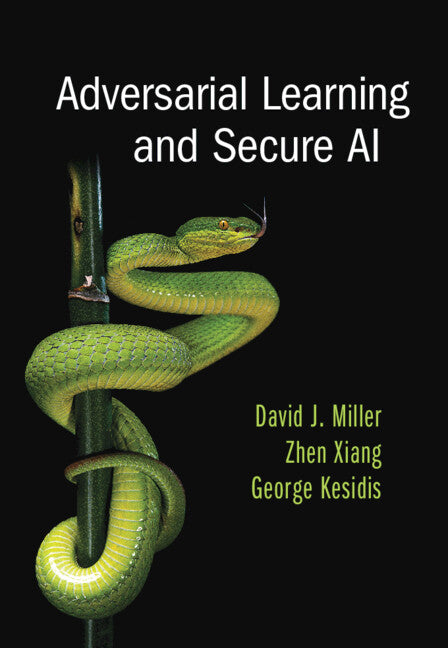

Adversarial Learning and Secure AI

The first textbook on adversarial machine learning, including both attacks and defenses, background material, and hands-on student projects.

David J. Miller (Author), Zhen Xiang (Author), George Kesidis (Author)

9781009315678, Cambridge University Press

Hardback, published 31 August 2023

350 pages

25.1 x 17.4 x 2.3 cm, 0.86 kg

'In a field that is moving at break-neck speed, this book provides a strong foundation for anyone interested in joining the fray.' Amir Rahmati, Stony Brook

Providing a logical framework for student learning, this is the first textbook on adversarial learning. It introduces vulnerabilities of deep learning, then demonstrates methods for defending against attacks and making AI generally more robust. To help students connect theory with practice, it explains and evaluates attack-and-defense scenarios alongside real-world examples. Feasible, hands-on student projects, which increase in difficulty throughout the book, give students practical experience and help to improve their Python and PyTorch skills. Book chapters conclude with questions that can be used for classroom discussions. In addition to deep neural networks, students will also learn about logistic regression, naïve Bayes classifiers, and support vector machines. Written for senior undergraduate and first-year graduate courses, the book offers a window into research methods and current challenges. Online resources include lecture slides and image files for instructors, and software for early course projects for students.

Contents

Preface

Notation

1. Overview of adversarial learning

2. Deep learning background

3. Basics of detection and mixture models

4. Test-time evasion attacks (adversarial inputs)

5. Backdoors and before/during training defenses

6. Post-training reverse-engineering defense (PT-RED) against imperceptible backdoors

7. Post-training reverse-engineering defense (PT-RED) against patch-incorporated backdoors

8. Transfer post-training reverse-engineering defense (T-PT-RED) against backdoors

9. Universal post-training backdoor defenses

10. Test-time detection of backdoor triggers

11. Backdoors for 3D point cloud (PC) classifiers

12. Robust deep regression and active learning

13. Error generic data poisoning defense

14. Reverse-engineering attacks (REAs) on classifiers

Appendix. Support vector machines (SVMs)

References

Index

Subject Areas: Computer networking & communications [UT]